Rethinking Efficiency

In his landmark 1776 work The Wealth of Nations, Adam Smith showed that a clever division of labor could make a commercial enterprise vastly more productive than if each worker took personal charge of constructing a finished product. Four decades later, in On the Principles of Political Economy and Taxation, David Ricardo took the argument further with his theory of comparative advantage, asserting that because Portuguese workers are relatively more efficient at making wine and English workers at making cloth, each group would be better off focusing on its area of advantage and trading with the other.

These insights both reflected and drove the Industrial Revolution, which was as much about process innovations that reduced waste and increased productivity as it was about the application of new technologies. The notions that the way we organize work can influence productivity more than individual effort can and that specialization creates commercial advantage underlie the study of management to this day. In that sense Smith and Ricardo were the precursors of Frederick Winslow Taylor, who introduced the idea that management could be treated as a science—thus starting a movement that reached its apogee with W. Edwards Deming, whose Total Quality Management system was designed to eliminate all waste in the production process.

Smith, Ricardo, Taylor, and Deming together turned management into a science whose objective function was the elimination of waste—whether of time, materials, or capital. The belief in the unalloyed virtue of efficiency has never dimmed. It is embodied in multilateral organizations such as the World Trade Organization, aimed at making trade more efficient. It is ensconced in the Washington Consensus via trade and foreign direct-investment liberalization, efficient forms of taxation, deregulation, privatization, transparent capital markets, balanced budgets, and waste-fighting governments. And it is promoted in the classrooms of every business school on the planet.

Eliminating waste sounds like a reasonable goal. Why would we not want managers to strive for an ever-more-efficient use of resources? Yet as I will argue, an excessive focus on efficiency can produce startlingly negative effects, to the extent that superefficient businesses create the potential for social disorder. This happens because the rewards arising from efficiency get more and more unequal as that efficiency improves, creating a high degree of specialization and conferring an ever-growing market power on the most-efficient competitors. The resulting business environment is extremely risky, with high returns going to an increasingly limited number of companies and people—an outcome that is clearly unsustainable. The remedy, I believe, is for business, government, and education to focus more strongly on a less immediate source of competitive advantage: resilience. This may reduce the short-term gains from efficiency but will produce a more stable and equitable business environment in the long run. I conclude by describing what a resilience agenda might involve.

To understand why an unrelenting focus on efficiency is so dangerous, we must first explore our most basic assumptions about how the rewards from economic activities are distributed.

Outcomes Aren’t Really Random

When predicting economic outcomes—incomes, profits, and so forth—we often assume that any payoffs at the individual level are random: dictated by chance. Of course, this is not actually so; payoffs are determined by a host of factors, including the choices we make. But those factors are so complex that as far as we can tell, economic outcomes might as well be determined by chance. Randomness is a simplifying assumption that fits what we observe.

If economic outcomes are random in this sense, the net result of many small, independent influences, statistics tells us that they will tend to follow a Gaussian distribution: When plotted on a graph, the vast majority of payoffs will be close to the average, with fewer and fewer occurring the further we move in either direction. This is sometimes known as a normal distribution, because many things in our world follow the pattern, including human traits such as height, weight, and intelligence. It is also called a bell curve, for its shape. As data points are added, the whole becomes ever more normally distributed.

Because the Gaussian distribution is so prevalent in human life and in nature, we tend to expect it across domains. We believe that outcomes are and should be normally distributed—not just in the physical world but in the world writ large.

For example, we expect the distributions of personal incomes and firm performance within industries to be roughly Gaussian, and we build our systems and direct our actions accordingly. The classic way to think about an industry, however defined, is that it will have a small number of winners, a small number of losers (who are probably going out of business), and lots of competitors clustered in the middle. In such an environment, most efficiency gains are swiftly erased as others adopt them, and as firms fail, new ones replace them. This idealized form of competition is precisely what antitrust policy seeks to achieve. We don’t want any single firm to grow so big and powerful that it shifts the distribution out of whack. And if the outcomes do follow a random distribution, and competitive advantage does not endure for long, competing on efficiency is sustainable.

But evidence doesn’t justify the assumption of randomness in economic outcomes. In reality, efficiency gains create an enduring advantage for some players, and the outcomes follow an entirely different type of distribution, one named for the Italian economist Vilfredo Pareto, who observed more than a century ago that 20% of Italians owned 80% of the country’s land. In a Pareto distribution, the vast majority of incidences are clustered at the low end, and the tail at the high end extends and extends. The mean is not a meaningful summary, because a handful of extreme values dominate it, and the typical case, captured by the median, sits far below it; the distribution is not stable. Unlike what occurs in a Gaussian distribution, additional data points render a Pareto distribution even more extreme.

That happens because Pareto outcomes, in contrast to Gaussian ones, are not independent of one another. Consider height—a trait that, as mentioned, tracks a Gaussian distribution. One person’s shortness does not contribute to another person’s tallness, so height (within each sex) is normally distributed. Now think about what happens when someone is deciding whom to follow on Instagram. Typically, he or she looks at how many followers various users have. People with just a few don’t even get into the consideration set. Conversely, famous people with lots of followers—for example, Kim Kardashian, who had 115 million at last count—are immediately attractive candidates because they already have lots of followers. The effect—many followers—becomes the cause of more of the effect: additional followers. Instagram followership, therefore, tracks a Pareto distribution: A very few people have the lion’s share of followers, and a large proportion of people have only a few. The median number of followers is 150 to 200—a tiny fraction of what Kim Kardashian has.
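The contrast between independent outcomes and self-reinforcing ones can be illustrated with a small simulation. The sketch below is a toy model, not a description of how Instagram actually works: the function names, the height parameters (mean 170 cm, standard deviation 10 cm), and the 10% chance that each step adds a new account are all illustrative assumptions. In the "rich get richer" model, each new follow goes to an existing account with probability proportional to the count that account already has.

```python
import random
import statistics


def simulate_independent(n_people, seed=0):
    """Independent outcomes: heights drawn from a Gaussian (assumed 170 cm +/- 10 cm)."""
    rng = random.Random(seed)
    return [rng.gauss(170, 10) for _ in range(n_people)]


def simulate_rich_get_richer(n_steps, p_new=0.1, seed=0):
    """Dependent outcomes: a toy preferential-attachment model.

    At each step, with probability p_new a new account joins with a count of 1
    (an assumption, so that it can be chosen later). Otherwise an existing
    account gains one follower, chosen in proportion to its current count.
    """
    rng = random.Random(seed)
    counts = [1]    # counts[i] is the current count of account i
    tickets = [0]   # one ticket per unit of count; a uniform draw is proportional
    for _ in range(n_steps):
        if rng.random() < p_new:
            counts.append(1)
            tickets.append(len(counts) - 1)
        else:
            chosen = rng.choice(tickets)
            counts[chosen] += 1
            tickets.append(chosen)
    return counts


def top_share(values, fraction=0.01):
    """Share of the total held by the top `fraction` of the population."""
    ordered = sorted(values, reverse=True)
    k = max(1, int(len(ordered) * fraction))
    return sum(ordered[:k]) / sum(ordered)


if __name__ == "__main__":
    heights = simulate_independent(10_000)
    followers = simulate_rich_get_richer(100_000)
    print("top 1% share, independent outcomes:", round(top_share(heights), 3))
    print("top 1% share, rich-get-richer:     ", round(top_share(followers), 3))
    print("median vs. mean followers:",
          statistics.median(followers), round(statistics.mean(followers), 1))
```

In the independent case the top 1% holds barely more than 1% of the total. In the self-reinforcing case a small group of early accounts ends up with a large share, and the median sits well below the mean.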

The same applies to wealth. The amount of money in the world at any one moment is finite. Every dollar you have is a dollar that is not available to anyone else, and your earning a dollar is not independent of another person’s earning a dollar. Moreover, the more dollars you have, the easier it is to earn more; as the saying goes, you need money to make money. As we’re often told, the richest 1% of Americans own almost 40% of the country’s wealth, while the bottom 90% own just 23%. The richest American is 100 billion times richer than the poorest American; by contrast, the tallest American adult is less than three times as tall as the shortest—demonstrating again how much wider the spread of outcomes is in a Pareto distribution.

We find a similar polarization in the geographic distribution of wealth. The rich are increasingly concentrated in a few places. In 1975, 21% of the richest 5% of Americans lived in the richest 10 cities. By 2012 the share had increased to 29%. The same holds for incomes. In 1966 the average per capita income in Cedar Rapids, Iowa, was equal to that in New York City; now it is 37% behind. In 1978 Detroit was on a par with New York City; now it is 38% behind. San Francisco was 50% above the national average in 1980; now it is 88% above. The comparable figures for New York City are 80% and 172%.

Business outcomes also seem to be shifting toward a Pareto distribution. Industry consolidation is increasingly common in the developed world: In more and more industries, profits are concentrated in a handful of companies. For instance, 75% of U.S. industries have become more concentrated in the past 20 years. In 1978 the 100 most profitable firms earned 48% of the profits of all publicly traded companies combined, but by 2015 the figure was an incredible 84%. The success stories of the so-called new economy are in some measure responsible—the dynamics of platform businesses, where competitive advantages often derive from network effects, quickly convert Gaussian distributions to Pareto ones, as with Kim Kardashian and Instagram.

The Growing Power of the Few

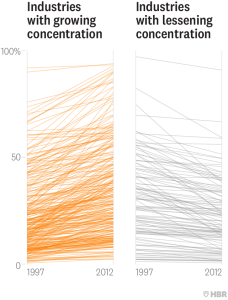

Since 1997 a strong majority of industries in the United States have become more concentrated. Many are now what economists consider “highly concentrated.” This tends to correlate with low levels of competition, high consumer prices, and high profit margins.

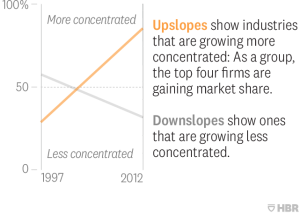

Key: How Concentration Is Calculated

The portion of an industry that is controlled by the top four firms indicates that industry’s concentration—a measure that changes over time.
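As a rough sketch of the calculation, the snippet below computes a four-firm concentration figure and labels the size of a shift using the 10- and 20-percentage-point thresholds described in this sidebar. The function names, the use of revenue as the measure of market share, the sample figures, and the "small mover" label are assumptions for illustration; they do not come from the underlying study.

```python
def four_firm_concentration(revenues, top_n=4):
    """Percentage of total industry revenue held by the top_n largest firms."""
    if not revenues:
        raise ValueError("revenues must not be empty")
    total = sum(revenues)
    if total <= 0:
        raise ValueError("total revenue must be positive")
    largest = sorted(revenues, reverse=True)[:top_n]
    return 100.0 * sum(largest) / total


def classify_shift(share_start, share_end):
    """Label a change in top-four share, both given in percent (0-100)."""
    # Round to avoid floating-point noise at the threshold boundaries.
    change = round(abs(share_end - share_start), 9)
    if change >= 20:
        return "very big mover"
    if change >= 10:
        return "big mover"
    return "small mover"  # hypothetical label; the sidebar does not name this group


if __name__ == "__main__":
    # Hypothetical industry with six firms (revenue in arbitrary units).
    industry = [40, 25, 15, 10, 5, 5]
    print(four_firm_concentration(industry))   # top four hold 90 of 100 units
    print(classify_shift(30.0, 52.0))
```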

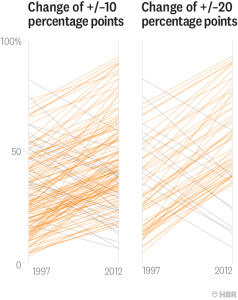

Overall, Concentration Is Increasing…

Plotting the change in concentration of more than 850 U.S. industries from 1997 to 2012 reveals upslopes in two-thirds of cases and downslopes in one-third. The large gap at the top of the downslope chart indicates that nearly all the industries that were highly concentrated in 1997 maintained or increased their concentration and that many industries are now very highly concentrated indeed.

…Especially When There Are Big Shifts in the Power of the Top Firms

During that time 285 industries (about a third of those studied) were “big movers”—the market share of the top four firms changed by at least 10 percentage points. Of those, 216 became more concentrated and 69 became less so.

The pattern is even more pronounced among the 92 “very big movers” (for which the market share of the top four firms changed by at least 20 percentage points): All but 10 of those industries became more concentrated.

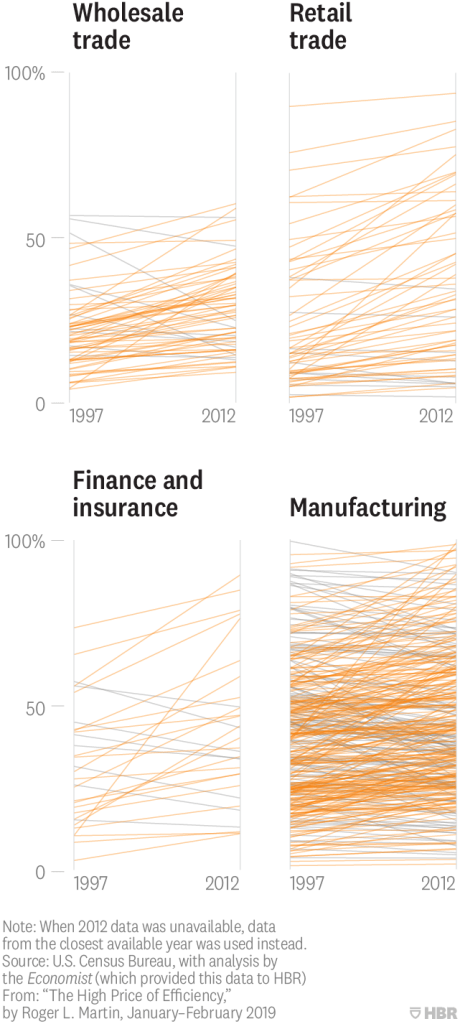

The Pattern Holds on a Sector Level

Aggregating the data, we see that entire sectors are becoming more concentrated. Here’s what the four largest look like.

Let’s examine how the quest for efficiency fits into this dynamic, along with the role of so-called monocultures and how power and self-interest lead some players to game the system, with corrosive results.

The Pressure to Consolidate

Complexity scholars, including UCLA’s Bill McKelvey, have identified several factors that systematically push outcomes toward Pareto distributions. Among them are pressure on the system in question and ease of connection between its participants. Think about a sandpile—a favorite illustration of complexity theorists. You can add thousands of grains of sand one by one without triggering a collapse; each grain has virtually no effect. But then one additional grain starts a chain reaction in which the entire pile collapses; suddenly a single grain has a huge effect. If the sandpile were in a no-gravity context, however, it wouldn’t collapse. It falls only as gravity pulls that final grain down, jarring the other grains out of position.

In business outcomes, gravity’s equivalent is efficiency. Consider the U.S. waste-management industry. At one time there were thousands of little waste-management companies—garbage collectors—across the country. Each had one to several trucks serving customers on a particular route. The profitability of those thousands of companies was fairly normally distributed. Most clustered around the mean, with some highly efficient and bigger companies earning higher profits, and some weaker ones earning lower profits.

Then along came Wayne Huizenga, the founder of Waste Management (WM). Looking at the cost structure of the business, he saw that two big factors were truck acquisition (the vehicles were expensive, and because they were used intensively, they needed to be replaced regularly) and maintenance and repair (intensive use made this both critical and costly). Each small player bought trucks one or maybe a handful at a time and ran a repair depot to service its little fleet.

Huizenga realized that if he acquired a number of routes in a given region, two things would be possible. First, he would have much greater purchasing leverage with truck manufacturers and could acquire vehicles more cheaply. Second, he could close individual maintenance facilities and build a single, far more efficient one. As he proceeded, the effect—greater efficiency—became the cause of more of the effect. Huizenga generated the resources to keep buying small garbage companies and expanding into new territories, which made WM bigger and more efficient still. This put competitive pressure on all small operators, because WM could come into their territories and underbid them. Those smaller firms could either lose money or sell to WM. Huizenga’s success represented a huge increase in pressure on the system.

Like a collapsing sandpile, the industry quickly consolidated, with WM as the dominant player, earning the highest profits; fellow consolidator Republic Services as the second-largest player, earning decent profits; several considerably smaller would-be consolidators earning meager returns; and lots of tiny companies mainly operating at subsistence levels. The industry today is structured as a Pareto distribution, with WM as winner-take-most. The company reported revenue of more than $14 billion in 2017; Huizenga died (in March 2018) a multibillionaire.

If WM is so highly efficient, why should we object? Don’t all consumers benefit, and does it matter whether WM or a collection of small firms issues sanitation workers’ paychecks? The answer is that a superefficient dominant model elevates the risk of catastrophic failure. To understand why, we’ll turn to an example from agriculture.

The Problem with Monocultures

Almonds were once grown in a number of places in America. But some locations proved better than others, and as in most production contexts, economies of scale could be had from consolidation. As it turns out, California’s Central Valley is perfect for almond growing, and today more than 80% of the world’s almonds are produced there. This is a classic business example of what biologists call a monoculture: A single factory produces a product, a single company holds sway in an industry, a single piece of software dominates all systems.

Such efficiency comes at a price. The almond industry designed away its redundancies, or slack, and in the process it lost the insurance that redundancy provides. One extreme local weather event or one pernicious virus could wipe out most of the world’s production.

And consolidation has knock-on effects. California’s almond blossoms all need to be pollinated in the same narrow window of time, because the trees grow in the same soil and experience the same weather. This necessitates shipping in beehives from all over America. At the same time, widespread bee epidemics have created concern about the U.S. population’s ability to pollinate all the plants that need the bees’ work. One theory about the epidemics is that because hives are being trucked around the country as never before for such monoculture pollinations, the bees’ resistance has been weakened.

Power and Self-Interest

As we saw with WM, another result of efficient systems is that the most efficient player inevitably becomes the most powerful one. Given that people operate substantially out of self-interest, the more efficient a system becomes, the greater the likelihood that efficient players will game it—and when that happens, the goal of efficiency ceases to be the long-term maximization of overall societal value. Instead, efficiency starts to be construed as that which delivers the greatest immediate value to the dominant player.

You can see this dynamic in the capital markets, where key corporate decision makers make common cause with the largest shareholders. It goes like this: Institutional investors support stock-based compensation for senior executives. The executives then take actions to reduce payroll and cut back on R&D and capital expenditures, all in the name of efficiency. The immediate savings boost cash flow and consequently cause the stock price to spike. Those investors—especially actively trading hedge funds—and executives then sell their holdings to realize short-term gains, almost certainly moving back in after the resulting decline in price. Their gains come at a cost. The most obvious losers are employees who are laid off because of the company’s flagging fortunes. But long-term shareholders also lose, because the company’s future is imperiled. And customers suffer in terms of product quality, which is threatened as the company reduces its investment in making improvements.

Advocates of shareholder value argue that competition from entrants with superior products and services will compensate: The newcomers will employ the laid-off workers, customers will flock to their products, and shareholders will switch to the investments that promise better returns. But this assumes that the market is highly dynamic and that power is not concentrated among a handful of players. Those assumptions are valid in some sectors. The airline industry is one: The main assets—planes and gates—are relatively easy to acquire and dispose of, so whenever demand rises, new players can enter. But it is not easy to start a bank, build a chip factory, or launch a telecom company. (Ironically, entry is perhaps most difficult in some of the hottest areas of the new economy, where competitive advantage is often tied up with network effects that give incumbents a powerful boost.) And sometimes power becomes so concentrated that political action is needed to loosen the stranglehold of the dominant players, as in the antitrust movement of the 1890s.

The pension fund business provides a particularly egregious case of abuse by dominant insiders. In theory, fund managers should compete on the quality of their long-term investment decisions, because that is what delivers value to pensioners. But 19 of the 25 biggest U.S. pension funds, accounting for more than 50% of the assets of the country’s 75 largest pension funds, are government-created and -regulated monopolies. Their customers have no choice of provider. If you are a teacher in Texas, the government mandates that the Teacher Retirement System of Texas—a government agency—manage your retirement assets. Fund managers’ jobs, therefore, are relatively secure as long as they don’t screw up in some obvious and public way. They are well placed to game the system.

The most straightforward way to do so is to accept inducements (typically offered by hedge funds) to invest in a particular way (one that benefits the hedge funds). In the past 10 years alone, senior executives of two of America’s largest pension funds (government monopolies, I might add) were successfully prosecuted for taking multimillion-dollar bribes from hedge funds. We can assume that for each occurrence we see, many more escape our scrutiny—and the bribery isn’t always so blatant, of course. Pension fund managers also accept luxurious trips they couldn’t afford on their own, and many have left their positions for lucrative jobs at investment banks or hedge funds.

A particularly insidious pension-fund practice is lending stock to short-selling hedge funds (pension funds are the largest such lenders), in return for which the funds’ managers earn relatively modest fees that help them meet their returns goals. The practice lets hedge funds create volatility in the capital markets, generating opportunities for traders but compromising the ability of company leaders to manage for the long term. Pensioners suffer while hedge funds and pension fund managers benefit.

The invisible hand of competition steers self-interested people to maximize value for all over the long term only in very dynamic markets in which outcomes really are random. And the process of competition itself works against this as long as it is focused exclusively on short-term efficiency, which, as we have seen, gives some players an advantage that often proves quite durable. As those players gain market share, they also gain market power, which makes it easier for them to gain value for their own interests by extracting rather than creating it.

How can society prevent the seemingly inevitable process of efficient entropy from taking hold? We must pay more attention to the less appreciated source of competitive advantage mentioned earlier: resilience.

Toward Resilience

Resilience is the ability to recover from difficulties—to spring back into shape after a shock. Think of the difference between being adapted to an existing environment (which is what efficiency delivers) and being adaptable to changes in the environment. Resilient systems are typically characterized by the very features—diversity and redundancy, or slack—that efficiency seeks to destroy.

To curb efficiency creep and foster resilience, organizations can:

Limit scale.

In antitrust policy, the trend since the early 1980s has been to loosen enforcement so as not to impede efficiency. In fact, in the United States and the European Union, “increase in efficiency” is considered a legitimate defense of a merger challenged on the grounds that it would lead to excess concentration—even if the benefits of that efficiency gain would accrue to just a few powerful players.

We need to reverse that trend. Market domination is not an acceptable outcome, even if achieved through legitimate means such as organic growth. It isn’t good for the world to have Facebook use its deep pockets from its core business to fund its Instagram subsidiary to destroy Snapchat. It isn’t good to have Amazon kill all other retailers. It wasn’t good to have Intel try to quash AMD decades ago by giving computer manufacturers discounts for not using AMD chips, and it wasn’t good to have Qualcomm engage in similar behavior in recent years. Our antitrust policy needs to be much more rigorous to ensure dynamic competition, even if that means lower net efficiency.

Introduce friction.

In our quest to make our systems more efficient, we have driven out all friction. It is as if we have tried to create a perfectly clean room, eradicating all the microbes therein. Things go well until a new microbe enters—wreaking havoc on the now-defenseless inhabitants.

To avoid such a trap, business and government need to engage in regular immunotherapy. Rather than design to keep all friction out of the system, we should inject productive friction at the right times and in the right places to build up the system’s resilience.

For example, lower barriers to international trade should not be seen as an unalloyed good. Although David Ricardo clearly identified the efficiency gains from trade, he did not anticipate the impact on Pareto outcomes. Policy makers should deploy some trade barriers to ensure that a few massive firms don’t dominate national markets, even if such domination appears to produce maximum efficiency. Small French baguette bakers are protected from serious competition by a staggering array of regulations. The result: Although not cheap, French baguettes are arguably the best in the world. Japan’s nontariff barriers make it nearly impossible for foreign car manufacturers to penetrate the market, but that hasn’t stopped Japan from giving rise to some of the most successful global car companies.

Friction is also needed in the capital markets. The current goal of U.S. regulators is to maximize liquidity and reduce transaction costs. This has meant that they first allowed the New York Stock Exchange to acquire numerous other exchanges and then allowed the NYSE itself to be acquired by the Intercontinental Exchange. A fuller realization of this goal would increase the pace at which the billionaire hedge-fund owners already at the far end of the Pareto distribution of wealth trade in fewer but ever bigger markets and generate even-more-extreme Pareto outcomes. U.S. regulators should act more like the EU, which blocked the merger of Europe’s two biggest players, the London Stock Exchange and the Deutsche Börse. And they should stop placing obstacles in the way of new players seeking to establish new exchanges, because those obstacles only solidify the power of consolidated players. In addition, short selling and the volatility it engenders could be dramatically reduced if the government prohibited public sector pension funds (such as the California Public Employees’ Retirement System and the New York State Common Retirement Fund) from lending stock.

Promote patient capital.

Common equity is supposed to be a long-term stake: Once it is given, the company notionally has the capital forever. In practice, however, anybody can buy that equity on a stock market without the company’s permission, which means that it can be a short-term investment. But long-term capital is far more helpful to a company trying to create and deploy a long-term strategy than short-term capital is. If you give me $100 but say that you can change how it is to be used with 24 hours’ notice, that money isn’t nearly as valuable to me as if you said I can use it as I want for 10 years. If Warren Buffett’s desired holding period for stock is, as he jokes, “forever,” while the quantitative arbitrage hedge fund Renaissance Technologies holds investments only for milliseconds, Buffett’s capital is more valuable than that of Renaissance.

The difference in value to the company notwithstanding, the two types of equity investments are given exactly the same rights. That’s a mistake; we should base voting rights on the period for which capital is held. Under that approach, each common share would give its holder one vote per day of ownership up to 3,650 days, or 10 years. If you held 100 shares for 10 years, you could cast 365,000 votes. If you sold those shares, the buyer would get 100 votes on the day of purchase. If the buyer became a long-term holder, eventually that would rise to 365,000 votes. But if the buyer were an activist hedge fund like Pershing Square, whose holding period is measured in months, the interests of long-term investors would swamp its influence on strategy, quite appropriately. Allocating voting rights in this way would reward long-term shareholders for providing the most valuable kind of capital. And it would make it extremely hard for activist hedge funds to take effective control of companies, because the instant they acquired a share, its rights would be reduced to a single vote.
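The arithmetic of the proposal can be sketched in a few lines. This is only an illustration of the rule as stated above (one vote per share per day of ownership, capped at 3,650 days, with the day of purchase counting as day one); the function name and the holdings in the usage example are hypothetical.

```python
MAX_VOTING_DAYS = 3650  # cap from the proposal: 10 years of ownership


def voting_rights(shares, days_owned):
    """Votes under the time-weighted rule: one vote per share per day owned.

    days_owned counts the day of purchase as day 1, so a brand-new holder
    gets exactly one vote per share. Days beyond the cap add nothing.
    """
    if shares < 0 or days_owned < 1:
        raise ValueError("shares must be >= 0 and days_owned must be >= 1")
    return shares * min(days_owned, MAX_VOTING_DAYS)


if __name__ == "__main__":
    print(voting_rights(100, 3650))   # 100 shares held for 10 years
    print(voting_rights(100, 1))      # the same 100 shares on the day of purchase

    # Hypothetical contest: an activist with ten times the shares but a
    # six-month holding period versus a 10-year holder.
    activist = voting_rights(1_000_000, 180)
    long_term = voting_rights(100_000, 3650)
    print(activist, long_term, long_term > activist)
```

In this hypothetical contest the long-term holder outvotes the activist despite owning one-tenth as many shares, which is the effect the proposal is designed to produce.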

Some argue that this would entrench bad management. It would not. Currently, investors who are unhappy with management can sell their economic ownership of a share along with one voting right. Under the proposed system, unhappy investors could still sell their economic ownership of a share along with one voting right. But if a lot of shareholders were happy with management and yet an activist wanted to make a quick buck by forcing the company to sell assets, cut R&D investment, or take other actions that could harm its future, that activist would have a reduced ability to collect the voting rights to push that agenda.

Create good jobs.

In our pursuit of efficiency, we have come to believe that routine labor is an expense to be minimized. Companies underinvest in training and skill development, use temporary and part-time workers, tightly schedule to avoid “excess hours,” and design jobs to require few skills so that they can be exceedingly low paid. This ignores the fact that labor is not just a cost; it is a resource that can be productive—and the current way of managing it drives down that productivity as it reduces the dollar cost.

What if we focused on longer-term productivity? Instead of designing jobs for low-skill, minimum-wage clock punchers, what if we designed them to be productive and valuable? In The Good Jobs Strategy, MIT’s Zeynep Ton describes how some discount retailers have doubled down on their employees, seeking more-engaged and more-knowledgeable workers, better customer service, lower turnover, and increased sales and profits, all leading to further investment. A key but counterintuitive element of the strategy is to build in slack so that employees have time to serve customers in unanticipated yet valuable ways.

It’s not just businesses that can benefit from a good jobs strategy. The cheap labor model is extremely costly to the wider economy. When they cut labor costs, companies such as Walmart simply transfer expenses traditionally borne by employers to taxpayers. A recent congressional study evaluated the impact of a single 200-person Walmart store on the federal budget. It turns out that each employee costs taxpayers $2,759 annually (in 2018 dollars) for benefits necessitated by the low wages, such as food and energy subsidies, housing and health care assistance, and federal tax credits. With 11,000 stores and 2.3 million employees, the company’s much-touted labor efficiency carries a hefty price tag indeed.

Teach for resilience.

Management education focuses on the single-minded pursuit of efficiency—and trains students in analytic techniques that deploy short-term proxies for measuring that quality. As a result, graduates head into the world to build (inadvertently, I believe) highly efficient businesses that largely lack resilience.

Management deans, professors, and students would undoubtedly beg to differ. But the curricula show otherwise. Finance teaches the pursuit of efficient financial structures. Efficient cost management is the goal of management accounting. Human resources teaches efficient staffing. Marketing is about the efficient targeting of and selling to segments. Operations management is about increasing plants’ efficiency. The overarching goal is the maximization of shareholder value.

Of course, none of these in itself is a bad thing. A corporation should maximize shareholder value—in the very long term. The problem is that today’s market capitalization is what defines shareholder value. Similarly, this quarter’s reductions in labor costs are what define efficiency. And the optimal capital structure for this year’s operating environment is what defines an efficient deployment of capital. Those are all short-term ways of assessing long-term outputs.

If we continue to promote these short-term proxies, managers will seek to maximize them, despite the cost to long-term resilience. And activist hedge funds will take control of companies and cause them to act in ways that appear, if judged by short-term proxies, to be highly efficient. Those funds will be applauded by regulators and institutional proxy voting advisers, all of whom will continue to think their actions have nothing to do with the production of more-fragile companies.

For the sake of the future of democratic capitalism, management education must become a voice for, not against, resilience.

Conclusion

In his 1992 work The End of History and the Last Man, Francis Fukuyama argued that the central theme of modern history is the struggle between despotism and what we now know as democratic capitalism. The latter certainly has the upper hand. But it’s a stretch to claim, as Fukuyama did, that it has won the war. Every day we find evidence that economic efficiency, which has traditionally underpinned democratic capitalism, is failing to distribute the concomitant gains. The stark realities of the Pareto distribution threaten the electorate’s core belief that the combination of democracy and capitalism can make the lives of a majority of us better over time. Our system is much more vulnerable and much less fair than we like to think. That needs to change.
